Accessible Learning: Designing for Every Mind

"When we design for the margins, we improve life for all." — Angela Glover Blackwell, PolicyLink

Can design translate emotion without relying on sight?

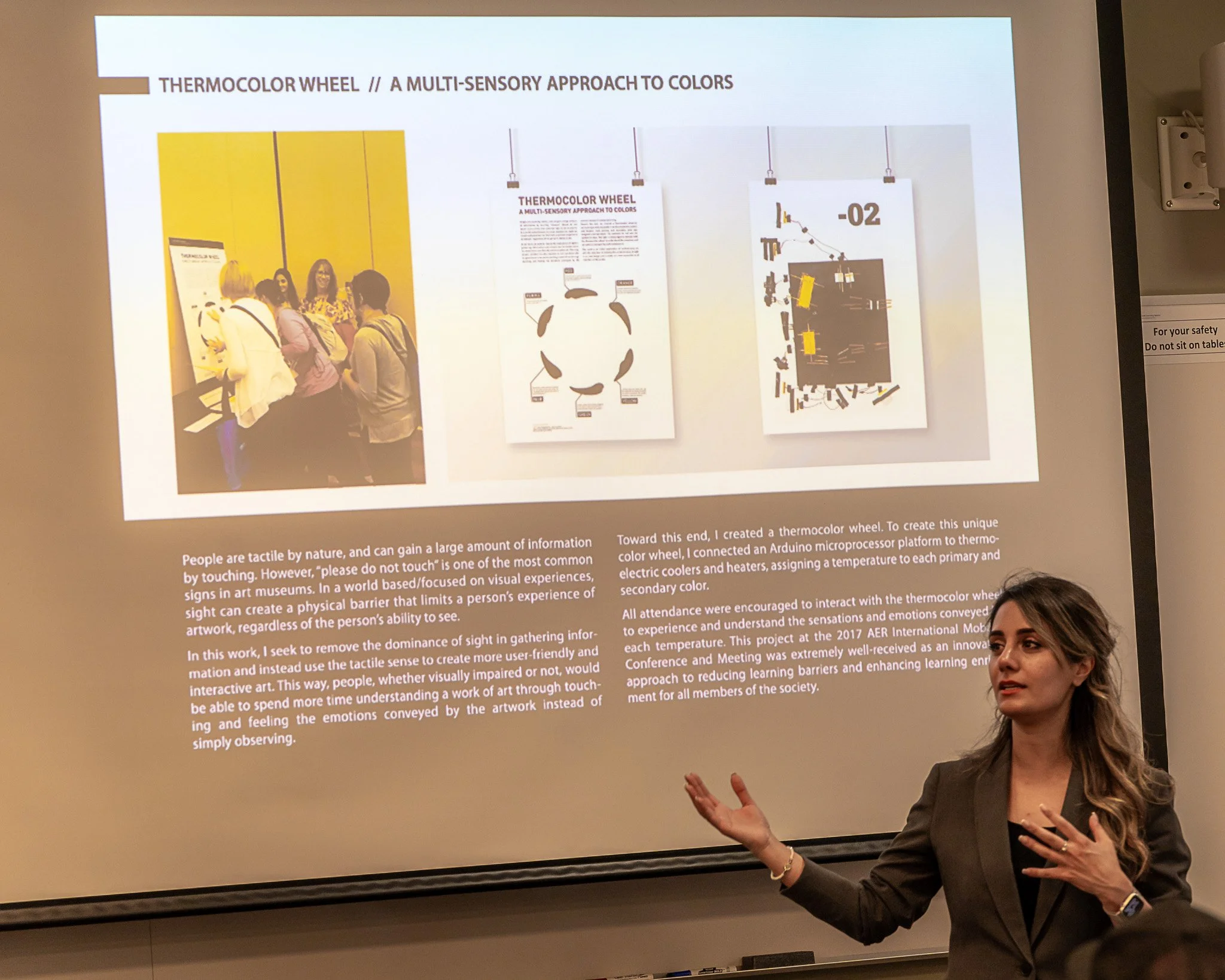

The Thermocolor Wheel invites visitors to experience color through touch. Using an Arduino microcontroller connected to thermoelectric coolers and heaters, it represents each primary and secondary color with sound and temperature. Presented at the 2017 AER International Mobility Conference, the project reimagined how tactile interaction can reduce learning barriers and enrich experiences for both sighted and visually impaired audiences.

For Claire

At the School for the Blind's library, I discovered a critical design failure: picture books with Braille text and random fabric swatches that had no connection to the actual content. A book about the ocean had rough burlap, while a story about puppies featured smooth satin. This disconnect revealed an opportunity for meaningful multi-sensory design. The Thermocolor Wheel prototype addressed this gap by creating logical, learnable connections between temperature, audio descriptions, and color. Through seven design iterations and testing with more than 25 users, I increased accurate color identification from 40% to 85% within a five-minute learning period. The Arduino-based prototype combined thermoelectric modules for precise temperature control (18°C for blue, 32°C for red) with contextual audio descriptions that helped users build mental models of each color.
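The color-to-temperature mapping can be sketched in a few lines. This is a hardware-free Python illustration, not the prototype's Arduino firmware: the function name `peltier_command`, the 0.5°C deadband, and the simple heat/cool/hold rule are all assumptions made for the example. Only the two setpoints (18°C for blue, 32°C for red) come from the project itself.

```python
# Setpoints in degrees Celsius. Only blue and red are stated in the project
# description; other colors are intentionally left out rather than guessed.
COLOR_SETPOINTS_C = {"blue": 18.0, "red": 32.0}

# Hypothetical tolerance: how far the surface may drift from the setpoint
# before the thermoelectric module is switched on again.
DEADBAND_C = 0.5


def peltier_command(color: str, surface_temp_c: float) -> str:
    """Return 'heat', 'cool', or 'hold' for the wheel segment showing `color`."""
    target = COLOR_SETPOINTS_C[color]
    if surface_temp_c > target + DEADBAND_C:
        return "cool"
    if surface_temp_c < target - DEADBAND_C:
        return "heat"
    return "hold"


if __name__ == "__main__":
    # A segment resting at a room temperature of 22°C.
    print(peltier_command("blue", 22.0))  # cool
    print(peltier_command("red", 22.0))   # heat
    print(peltier_command("red", 32.2))   # hold
```

The deadband keeps the module from flickering between heating and cooling when the surface sits close to its target, which matters when the goal is a stable, learnable sensation for each color.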

Beyond single-sense design

This project fundamentally shifted my approach to product design. Testing revealed that users performed better with redundant sensory channels, but more importantly, different users relied on different primary inputs depending on their context. A sighted person in a dark room relied on temperature. Someone wearing gloves focused on audio. This insight now drives all my design work: always provide multiple pathways to the same information. It's not about special accommodations; it's about recognizing that user context constantly changes. Think of the parent checking a phone in a dark nursery, the runner adjusting settings mid-workout, or the student reviewing materials on a crowded bus. When we design for variable contexts from the start, we create products that actually work in the real world.

Scaling personalization through AI

In my classroom today, I apply these same principles using AI to create adaptive learning at scale. My students include long-distance commuters who can only study through audio, visual processors who get lost in text-heavy materials, and working professionals who need information in five-minute chunks between meetings. I use AI tools to automatically generate podcast versions of all readings, create visual concept maps from text chapters, and break down video lectures into searchable, timestamped segments with interactive transcripts. Analytics show that given these options, students engage with materials 3x more frequently and complete courses at a 40% higher rate. This isn't about labeling learners or creating special tracks. It's about building flexible systems that let users choose what works for their current context, whether that's a noisy train, a quick lunch break, or a late-night study session after the kids are asleep.